“LLM grooming” refers to the deliberate manipulation of large language models (LLMs) by flooding their training data with disinformation, with the aim of biasing their outputs towards specific narratives. It is a specific type of data poisoning.

In simplified terms, it goes a little like this: bad actors publish thousands of articles on hundreds of websites. (Probably written, ironically, by an AI chatbot.) These websites may not be read by many humans, but the articles on them get sucked up into the datasets used to train AI chatbots like ChatGPT, Gemini and Claude. As far as the chatbot is concerned, the false narrative then statistically outweighs the true one, simply because of the sheer number of articles that repeat it.

Credit for finding this neologism goes to Mignon Fogarty, a.k.a. Grammar Girl, who found the word in this article and was kind enough to email me about it!

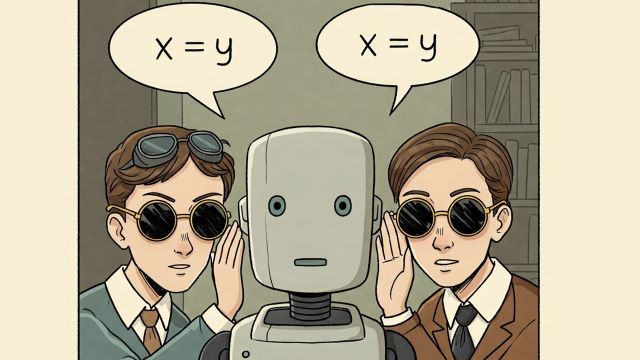

The image for this article was of course generated by AI – oh, the irony!

Heddwen Newton is an English teacher and translator. She is fascinated by contemporary English and the way English changes. Her newsletter is English in Progress. More than 2,300 subscribers and growing every day!